This chapter explains the core architectural components, their context views, and how HelloDATA works under the hood.

We distinguish between two main domains: the Business Domain and the Data Domain.

Resources encapsulated inside a Data Domain can be:

On top, you can add subsystems. These can be seen as extensions that make HelloDATA pluggable with additional tools. We currently support CloudBeaver for viewing your Postgres databases, RtD, and Gitea. You can imagine adding almost any tool with the capabilities you'd like to have (data catalog, semantic layer, specific BI tool, Jupyter Notebooks, etc.).

Read more about Business and Data Domain access rights in Roles / Authorization Concept.


Zooming into the Data Domains that can exist within a Business Domain, we see an example with Data Domains A to C. Each Data Domain has persistent storage, in our case Postgres (see more details in the Infrastructure Storage chapter below).

Each Data Domain might import different source systems; some might even be used in several Data Domains, as illustrated. Each Data Domain is meant to have its own data model, ideally a straightforward layered one, as shown in the image with:

Data from various source systems is first loaded into the Landing/Staging Area.

The delivered data must be cleaned before it is loaded into the Data Processing (Core). Most of these cleaning steps are performed in this area.

The data from the different source systems is brought together in a central area, the Data Processing (Core), via the Landing and Data Storage, and stored there for extended periods, often several years.

Subsets of the data from the Core are stored in a form suitable for user queries.
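To make the layers more tangible, here is a minimal sketch that uses Python's built-in `sqlite3` module as a stand-in for Postgres. The table names (`landing_orders`, `core_orders`, `mart_sales_per_country`) and the cleaning rules are hypothetical and only illustrate the flow from raw landing data over a cleaned core to a query-friendly subset.

```python
import sqlite3


def build_layers(conn: sqlite3.Connection) -> list[tuple[str, float]]:
    cur = conn.cursor()

    # Landing/Staging: raw data exactly as delivered by a source system (untyped text).
    cur.execute("CREATE TABLE landing_orders (order_id TEXT, country TEXT, amount TEXT)")
    cur.executemany(
        "INSERT INTO landing_orders VALUES (?, ?, ?)",
        [("1", " ch ", "100.5"), ("2", "CH", "49.5"), ("3", "de", "20"), ("3", "de", "20"), ("4", "AT", "")],
    )

    # Core: cleaned (trimmed, typed, de-duplicated) data, kept for the long term.
    cur.execute(
        """
        CREATE TABLE core_orders AS
        SELECT DISTINCT CAST(order_id AS INTEGER) AS order_id,
               UPPER(TRIM(country))        AS country,
               CAST(amount AS REAL)        AS amount
        FROM landing_orders
        WHERE amount <> ''  -- drop rows without an amount
        """
    )

    # Data mart: a subset shaped for user queries, e.g. one dashboard.
    cur.execute(
        """
        CREATE TABLE mart_sales_per_country AS
        SELECT country, SUM(amount) AS total_amount
        FROM core_orders
        GROUP BY country
        """
    )
    return cur.execute(
        "SELECT country, total_amount FROM mart_sales_per_country ORDER BY country"
    ).fetchall()


if __name__ == "__main__":
    print(build_layers(sqlite3.connect(":memory:")))  # [('CH', 150.0), ('DE', 20.0)]
```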

Between the layers, there is a lot of metadata.

Different types of metadata are needed for the smooth operation of the Data Warehouse. Business metadata contains business descriptions of all attributes, drill paths, and aggregation rules for the front-end applications and code designations. Technical metadata describes, for example, data structures, mapping rules, and parameters for ETL control. Operational metadata contains all log tables, error messages, logging of ETL processes, and much more. The metadata forms the infrastructure of a DWH system and is described as "data about data".


Within a Data Domain, several users build different dashboards. Think of a dashboard as a specific use case, e.g., Covid or Sales, that serves a particular purpose. Each of these dashboards consists of individual charts and data sources in Superset. Ultimately, what you see in the HelloDATA portal are the dashboards that combine all of the sub-components Superset provides.


As described in the intro, the portal is the heart of the HelloDATA application, providing access to all critical applications.

Entry page of HelloDATA: when you enter the portal for the first time, you land on the dashboard, where you have

More technical details are in the "Module deployment view" chapter below.


Going one level deeper, we see that we use different modules to make the portal and HelloDATA work.

We have the following modules:


At the center are two components, NATS and Keycloak. Keycloak, together with the HelloDATA portal, handles the authentication, authorization, and permission management of HelloDATA components. Keycloak is a powerful open-source identity and access management system. Its primary benefits include:

NATS, on the other hand, is central to handling communication between the different modules. Its power comes from connecting modern distributed systems: it is the glue between microservices, whether for exchanging and processing messages or for stream processing.

NATS focuses on hyper-connected moving parts and the additional data each module generates. It supports location independence and mobility, whether the backend process is streaming or otherwise, and handles all of it securely.

NATS lets mobile frontends and microservices connect flexibly. There is no need for static 1:1 communication with a hostname, IP, or port; instead, NATS provides m:n connectivity based on subjects. You can still use 1:1 communication, but on top, you get things like load balancers, logs, system and network security models, proxies, and, most important for us, sidecars. We use sidecars heavily in combination with NATS.
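To illustrate subject-based m:n addressing, here is a small in-memory sketch. It mimics the NATS subject matching rules (tokens separated by `.`, `*` matching exactly one token, `>` matching one or more trailing tokens) but it is not the NATS client library; the `InMemoryBus` class and the subject names are hypothetical.

```python
from collections import defaultdict
from typing import Callable

Handler = Callable[[str, bytes], None]


def subject_matches(pattern: str, subject: str) -> bool:
    """Return True if a subject matches a NATS-style subject pattern."""
    p_tokens = pattern.split(".")
    s_tokens = subject.split(".")
    for i, token in enumerate(p_tokens):
        if token == ">":
            # '>' must be the last pattern token and needs at least one remaining subject token.
            return i == len(p_tokens) - 1 and len(s_tokens) > i
        if i >= len(s_tokens):
            return False
        if token != "*" and token != s_tokens[i]:
            return False
    return len(p_tokens) == len(s_tokens)


class InMemoryBus:
    """Toy bus: publishers address subjects, never hostnames, IPs, or ports."""

    def __init__(self) -> None:
        self._subs: dict[str, list[Handler]] = defaultdict(list)

    def subscribe(self, pattern: str, handler: Handler) -> None:
        self._subs[pattern].append(handler)

    def publish(self, subject: str, payload: bytes) -> int:
        """Deliver to every matching subscriber (m:n); return the delivery count."""
        delivered = 0
        for pattern, handlers in self._subs.items():
            if subject_matches(pattern, subject):
                for handler in handlers:
                    handler(subject, payload)
                    delivered += 1
        return delivered


if __name__ == "__main__":
    bus = InMemoryBus()
    bus.subscribe("hellodata.superset.*", lambda s, p: print("superset worker got", s))
    bus.subscribe("hellodata.>", lambda s, p: print("audit logger got", s))
    print(bus.publish("hellodata.superset.user_role", b"{}"))  # 2
```

The publisher never knows how many receivers exist: adding another subscriber to the same subject requires no change on the sending side.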

NATS can be deployed nearly anywhere: on bare metal, in a VM, as a container, inside Kubernetes (K8s), on a device, or in whichever environment you choose, with security built in.

Here is an example of subsystem communication. NATS, at the center, handles the communication between the HelloDATA platform and the subsystems with their workers, as seen in the image below.

The HelloDATA portal has workers. These workers are deployed as extra containers next to the main container, so-called "sidecar containers". Each module that needs to communicate requires a sidecar with these workers to talk to NATS. Accordingly, each subsystem also has its own workers connected to NATS.
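In Kubernetes terms, a sidecar is simply a second container in the same Pod as the module it serves. The sketch below builds such a Deployment manifest as a Python dict and prints it as JSON, which `kubectl apply -f` accepts just like YAML. The image names, labels, namespace, and the `NATS_URL` environment variable are hypothetical placeholders, not the actual HelloDATA charts.

```python
import json


def deployment_with_sidecar(
    module: str,
    module_image: str,
    worker_image: str,
    namespace: str,
    nats_url: str = "nats://nats:4222",  # hypothetical NATS Service address (4222 is the default client port)
) -> dict:
    """Build a Deployment whose Pod runs the module plus a NATS worker sidecar."""
    labels = {"app": module}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": module, "namespace": namespace},
        "spec": {
            "replicas": 1,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        # Main container: the module itself, e.g. Superset.
                        {"name": module, "image": module_image},
                        # Sidecar container: the worker that talks to NATS on the module's behalf.
                        {
                            "name": f"{module}-sidecar",
                            "image": worker_image,
                            "env": [{"name": "NATS_URL", "value": nats_url}],
                        },
                    ]
                },
            },
        },
    }


if __name__ == "__main__":
    manifest = deployment_with_sidecar(
        "superset",
        "example.org/superset:latest",
        "example.org/hellodata-sidecar:latest",
        "hellodata",
    )
    print(json.dumps(manifest, indent=2))
```

Both containers share the Pod's network namespace and lifecycle, which is what makes the sidecar a natural place for the NATS worker. Recent Kubernetes versions also support native sidecars (init containers with `restartPolicy: Always`); the classic two-container pattern is shown here.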


Everything starts with a web browser session. The HelloDATA user accesses the HelloDATA Portal through HTTP. Before you see any of your modules or components, you must authenticate against Keycloak. Once logged in, you have a single sign-on (SSO) token that gives access to different Business Domains or Data Domains, depending on your role.
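Keycloak issues its tokens as JSON Web Tokens (JWTs), whose payload carries claims such as the user's roles. The sketch below only decodes the payload with the standard library to show what is inside; it does not verify the signature, so it must never be used for authorization decisions. The `realm_access.roles` claim follows Keycloak's default access-token layout; the role names in the example are hypothetical.

```python
import base64
import json


def decode_jwt_payload(token: str) -> dict:
    """Decode (WITHOUT verifying the signature!) the payload part of a JWT."""
    try:
        _header, payload, _signature = token.split(".")
    except ValueError:
        raise ValueError("not a JWT: expected three dot-separated parts") from None
    # JWTs use unpadded base64url; restore the padding before decoding.
    padded = payload + "=" * (-len(payload) % 4)
    return json.loads(base64.urlsafe_b64decode(padded))


def realm_roles(token: str) -> list[str]:
    """Return the realm roles claim, or an empty list if the token has none."""
    claims = decode_jwt_payload(token)
    return claims.get("realm_access", {}).get("roles", [])
```

In a real deployment, services validate the token against Keycloak's published keys with a proper JWT library before trusting any claim.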

The HelloDATA portal sends events to the EventWorkers and connects via JDBC to the portal database, which persists the portal's settings and the necessary configurations.

The EventWorkers, on the other side, communicate with the different HelloDATA modules discussed above (Keycloak, NATS, and the data stack with dbt, Airflow, and Superset) where needed. Each module is part of the domain view and persists its data in its own datastore.


In this flow chart, you see again what we discussed above in a different way. Here, we assign a new user role. Again, everything starts with the HelloDATA Portal and an existing session from Keycloak. With that, the portal worker will publish a JSON message via UserRoleEvent to NATS. As the communication hub for HelloDATA, NATS knows what to do with each message and sends it to the respective subsystem worker.
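The exact schema of the UserRoleEvent message is not described here, so the following is a hypothetical sketch: a dataclass with assumed fields (`user_email`, `context_key`, `role`) that is serialized to the JSON payload a portal worker would publish and parsed again on the subscriber side.

```python
import json
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class UserRoleEvent:
    # Hypothetical fields; the real HelloDATA event may look different.
    user_email: str
    context_key: str  # e.g. the Business or Data Domain the role applies to
    role: str


def to_message(event: UserRoleEvent) -> bytes:
    """Serialize the event to the JSON bytes that would be published to NATS."""
    return json.dumps(asdict(event), sort_keys=True).encode("utf-8")


def from_message(data: bytes) -> UserRoleEvent:
    """Parse a received message; raises TypeError on missing or unknown fields."""
    return UserRoleEvent(**json.loads(data))


if __name__ == "__main__":
    msg = to_message(UserRoleEvent("jane@example.org", "data-domain-a", "VIEWER"))
    print(msg)  # b'{"context_key": "data-domain-a", "role": "VIEWER", "user_email": "jane@example.org"}'
```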

Subsystem workers execute that instruction, creating and populating roles in, e.g., Superset and Airflow, and once done, inform the spawned subsystem worker. That worker pushes the result back to NATS, which notifies the portal worker, and finally a message is displayed in the HelloDATA portal.
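The round trip can be simulated in a few lines. In this hypothetical sketch, plain Python functions stand in for the subsystem workers, and their return values stand in for the reply messages that travel back over NATS; the portal worker collects one acknowledgement per subsystem before producing the message shown in the portal. Subsystem names, payload fields, and the reply format are assumptions.

```python
import json
from typing import Callable

# A subsystem worker receives the event payload and returns a reply payload.
Worker = Callable[[bytes], bytes]


def make_subsystem_worker(name: str, roles_store: dict[str, set[str]]) -> Worker:
    """Hypothetical worker that 'creates' the role in its subsystem, then replies."""

    def handle(payload: bytes) -> bytes:
        event = json.loads(payload)
        roles_store.setdefault(event["user_email"], set()).add(event["role"])
        return json.dumps({"subsystem": name, "status": "done"}).encode()

    return handle


def portal_worker_assign_role(event: dict, workers: dict[str, Worker]) -> str:
    """Send the event to every subsystem worker and collect their replies."""
    payload = json.dumps(event).encode()
    replies = [json.loads(worker(payload)) for worker in workers.values()]
    done = sorted(r["subsystem"] for r in replies if r["status"] == "done")
    # The final message the portal would display to the user.
    return f"Role '{event['role']}' assigned in: {', '.join(done)}"


if __name__ == "__main__":
    superset_roles: dict[str, set[str]] = {}
    airflow_roles: dict[str, set[str]] = {}
    workers = {
        "superset": make_subsystem_worker("superset", superset_roles),
        "airflow": make_subsystem_worker("airflow", airflow_roles),
    }
    print(portal_worker_assign_role({"user_email": "jane@example.org", "role": "VIEWER"}, workers))
    # Role 'VIEWER' assigned in: airflow, superset
```

The sketch is synchronous for readability; with NATS in between, publishing and replying happen asynchronously, and the portal worker would have to handle timeouts for subsystems that never answer.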

This chapter explains which data stack is behind HelloDATA BE.

The differentiator of HelloDATA lies in the Portal. It combines all the loosely coupled open-source tools into a single control pane.

The portal lets you see:

You can find more about the navigation and the features in the User Manual.

dbt is a small database toolset that has gained immense popularity and is the de facto standard for working with SQL. Why, you might ask? Besides Python, SQL is the most used language among data engineers: it is declarative, its basics are easy to learn, and many business analysts or people working with Excel or similar tools might know a little already.

The declarative approach is handy as you only define the what: you determine which columns you want in the SELECT and which table to query in the FROM statement. You can do more advanced things with WHERE, GROUP BY, etc., but you do not need to care about the how. You do not need to know which database or partition the data is stored in, what segment, or what storage. You do not need to know whether using an index makes sense. All of it is handled by the query optimizer of Postgres (or any database supporting SQL).
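A minimal illustration of the declarative style, using Python's built-in `sqlite3` module instead of Postgres: the query only states which columns, filter, and grouping are wanted, while the database's query planner decides how to execute it, for example whether to use the index. Table and column names are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (region TEXT, product TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO sales VALUES (?, ?, ?)",
    [("north", "a", 10.0), ("north", "b", 5.0), ("south", "a", 7.5), ("south", "a", 2.5)],
)
conn.execute("CREATE INDEX idx_sales_region ON sales (region)")

# The "what": columns in SELECT, source in FROM, filter in WHERE, grouping in GROUP BY.
query = """
    SELECT region, SUM(amount) AS total
    FROM sales
    WHERE product = 'a'
    GROUP BY region
    ORDER BY region
"""
rows = conn.execute(query).fetchall()
print(rows)  # [('north', 10.0), ('south', 10.0)]

# The "how" is chosen by the planner; EXPLAIN QUERY PLAN shows its decision.
for step in conn.execute("EXPLAIN QUERY PLAN " + query):
    print(step)
```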

But let's face it: SQL also has its downsides. If you have worked extensively with SQL, you know the spaghetti code that usually results. The issue is reusability: there is no variable you can set and reuse in SQL. If you are familiar with them, you can achieve a better structure with CTEs, which allow you to define specific queries as a block to reuse later. But this only works within one single query and is mostly handy when that query is already long.
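For example, a CTE (the `WITH` clause) names an intermediate result once and lets the rest of the statement reuse it, but that name only exists inside this one statement. The sketch uses Python's built-in `sqlite3` module with a hypothetical `orders` table.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (customer TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO orders VALUES (?, ?)",
    [("alice", 30.0), ("alice", 20.0), ("bob", 5.0), ("carol", 45.0)],
)

# The CTE `customer_totals` is defined once and referenced twice below.
query = """
    WITH customer_totals AS (
        SELECT customer, SUM(amount) AS total
        FROM orders
        GROUP BY customer
    )
    SELECT customer, total
    FROM customer_totals
    WHERE total > (SELECT AVG(total) FROM customer_totals)
    ORDER BY customer
"""
above_average = conn.execute(query).fetchall()
print(above_average)  # [('alice', 50.0), ('carol', 45.0)]

# The name only lives inside that one statement; a second query cannot reuse it.
try:
    conn.execute("SELECT * FROM customer_totals")
    cte_reusable = True
except sqlite3.OperationalError:
    cte_reusable = False
print(cte_reusable)  # False
```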

But what if you'd like to define your facts and dimensions as separate queries and reuse them in other queries? You'd need to decouple the queries from storage: persist the result to disk and use that table as the FROM statement of the following query. But what if we change something in the query, or even change its name? We won't notice it in the dependent queries. And we need to find out ourselves which queries depend on each other, as there is no lineage or dependency graph.

It takes a lot of work to stay organized with SQL. Databases offer little support here, as SQL is declarative: you need to figure out yourself how to store your queries in git and how to run them.

That's where dbt comes into play. dbt lets you create these dependencies within SQL. You can declaratively build on each query, and you'll get errors if one query changes but the dependent one does not. You get a lineage graph (see an example), unit tests, and more. It's like having an assistant that helps you do your job: dbt stitches software engineering practices on top of SQL engineering.
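To show the idea behind such a dependency graph, here is a deliberately simplified sketch, not dbt's actual implementation: each model is a SQL template that refers to other models via `{{ ref('...') }}`. Extracting those references yields a graph that can be checked for missing models and sorted so that every model is built after its dependencies. The model names are hypothetical; `graphlib` requires Python 3.9 or later.

```python
import re
from graphlib import TopologicalSorter

# Matches {{ ref('model_name') }} or {{ ref("model_name") }}.
REF = re.compile(r"""\{\{\s*ref\(\s*['"](\w+)['"]\s*\)\s*\}\}""")


def dependency_graph(models: dict[str, str]) -> dict[str, set[str]]:
    """Map each model name to the set of models it references."""
    graph: dict[str, set[str]] = {}
    for name, sql in models.items():
        refs = set(REF.findall(sql))
        missing = refs.difference(models)
        if missing:
            # A renamed or deleted upstream model is reported instead of failing silently.
            raise ValueError(f"model {name!r} references unknown model(s): {sorted(missing)}")
        graph[name] = refs
    return graph


def build_order(models: dict[str, str]) -> list[str]:
    """Order models so that each one is built after everything it depends on."""
    # TopologicalSorter raises graphlib.CycleError (a ValueError) on circular refs.
    return list(TopologicalSorter(dependency_graph(models)).static_order())


models = {
    "stg_orders": "SELECT * FROM raw_orders",
    "fct_sales": "SELECT customer_id, SUM(amount) AS total FROM {{ ref('stg_orders') }} GROUP BY 1",
    "sales_dashboard": "SELECT * FROM {{ ref('fct_sales') }}",
}
print(build_order(models))  # ['stg_orders', 'fct_sales', 'sales_dashboard']
```

The same graph is what a lineage view visualizes: nodes are models, edges are `ref` calls.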

Because it becomes so easy to build models, the danger we need to be aware of is ending up with thousands of tables. Even though dbt's pre-compilation catches many errors, good data modeling techniques are essential to succeed.

Below, you see dbt docs, lineage, and templates:

1. Project Navigation
2. Detail Navigation
3. SQL Template
4. SQL Compiled (the actual SQL that gets executed)
5. Full data lineage with the sources and transformations for the current object
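The difference between the SQL template (3) and the compiled SQL (4) can be sketched as a plain text substitution: every `{{ ref('model') }}` is replaced by the relation the model is materialized as. This is a simplified illustration, not dbt's compiler (which uses Jinja plus your project and profile configuration); the schema name `analytics` is a hypothetical placeholder.

```python
import re

# Matches {{ ref('model_name') }} or {{ ref("model_name") }}.
REF = re.compile(r"""\{\{\s*ref\(\s*['"](\w+)['"]\s*\)\s*\}\}""")


def compile_sql(template: str, schema: str = "analytics") -> str:
    """Replace every ref() call with a quoted, schema-qualified relation name."""
    return REF.sub(lambda m: f'"{schema}"."{m.group(1)}"', template)


template = "SELECT customer_id, SUM(amount) AS total FROM {{ ref('stg_orders') }} GROUP BY 1"
print(compile_sql(template))
# SELECT customer_id, SUM(amount) AS total FROM "analytics"."stg_orders" GROUP BY 1
```

Because the template never hard-codes the schema, the same model can compile to different targets (e.g. a development and a production schema).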


Or zoom into the dbt lineage (when clicked):


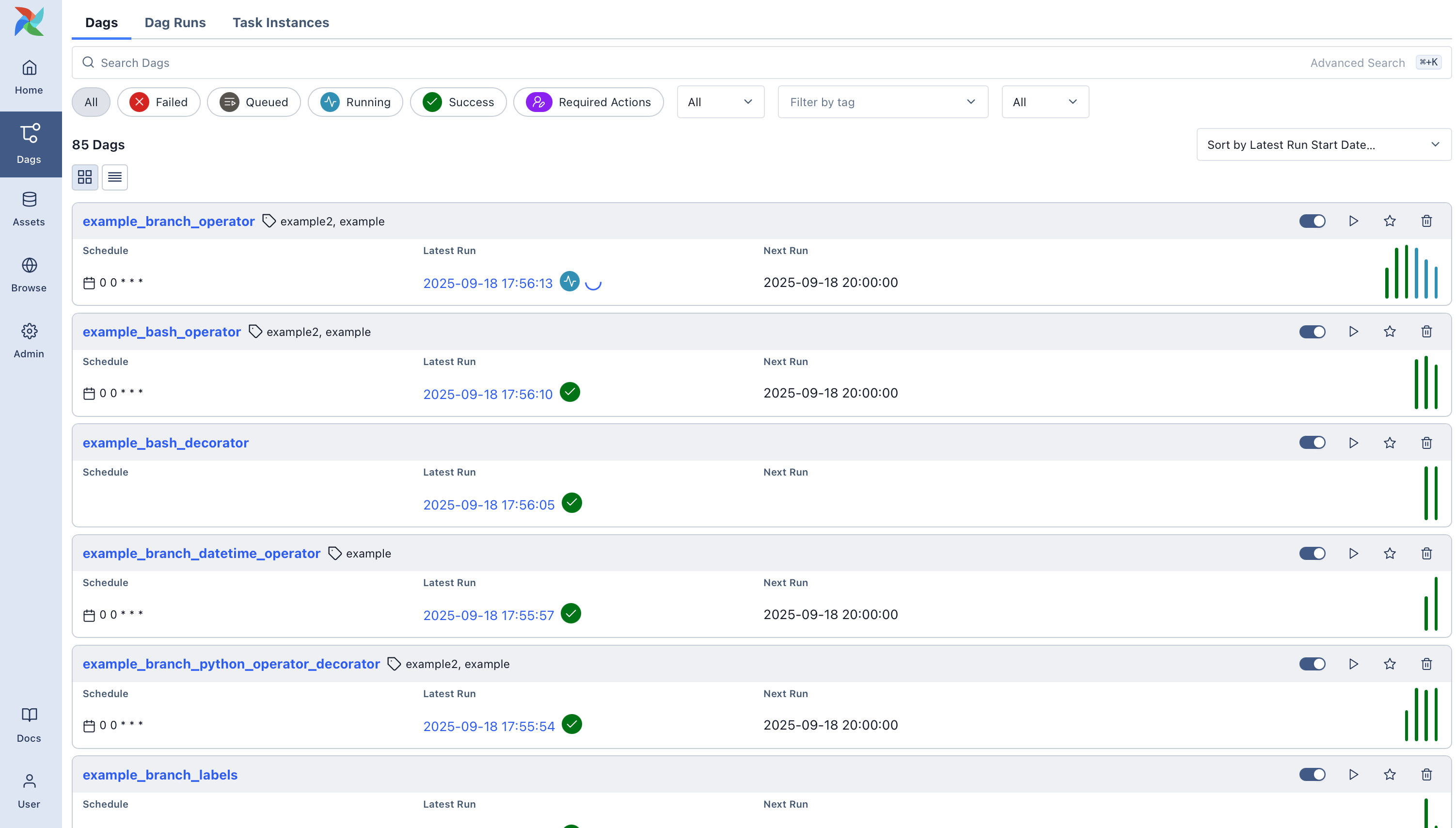

Airflow is the natural next step. If you have many SQL queries representing your business metrics, you want them to run on a daily or hourly schedule or triggered by events. That's where Airflow comes into play. Airflow is, in its simplest terms, a task or workflow scheduler whose tasks or DAGs (as they are called) can be written programmatically in Python. If you know cron jobs, they are the most basic task scheduler in Linux (think `* * * * *`), but they offer little to no customization beyond simple time scheduling.

Airflow is different. Writing the DAGs in Python allows you to do whatever your business logic requires before or after a particular task is started. In the past, ETL tools like Microsoft SQL Server Integration Services (SSIS) and others were widely used. They were where your data transformation, cleaning, and normalisation took place. In more modern architectures, these tools aren’t enough anymore. Moreover, code and data transformation logic are much more valuable when they are accessible to other data-savvy people (data analysts, data scientists, business analysts) in the company than when they are locked away in a proprietary format.

Airflow, or an orchestrator in general, ensures the correct execution of dependent tasks. It is very flexible and extensible with operators from the community or with built-in capabilities of the framework itself.
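Airflow declares task dependencies with the bitshift operator (`extract >> clean`). The stdlib-only sketch below imitates that syntax with a hypothetical `Task` class and runs tasks in dependency order. It is not Airflow's API, which additionally handles scheduling, retries, and distributed execution; `graphlib` requires Python 3.9 or later.

```python
from __future__ import annotations

from graphlib import TopologicalSorter
from typing import Callable


class Task:
    """Hypothetical minimal task: `a >> b` means that b runs after a."""

    def __init__(self, task_id: str, action: Callable[[], None]) -> None:
        self.task_id = task_id
        self.action = action
        self.upstream: set[Task] = set()

    def __rshift__(self, other: Task | list[Task]) -> Task | list[Task]:
        # Accept a single task or a list of tasks as downstream.
        for downstream in other if isinstance(other, list) else [other]:
            downstream.upstream.add(self)
        return other  # returning `other` allows chains such as a >> b >> c


def run(tasks: list[Task]) -> list[str]:
    """Run every task after all of its upstream tasks; return the execution order."""
    graph = {task: task.upstream for task in tasks}
    executed = []
    for task in TopologicalSorter(graph).static_order():
        task.action()
        executed.append(task.task_id)
    return executed


log: list[str] = []
extract = Task("extract", lambda: log.append("extract"))
clean = Task("clean", lambda: log.append("clean"))
load_core = Task("load_core", lambda: log.append("load_core"))
refresh_mart = Task("refresh_mart", lambda: log.append("refresh_mart"))

extract >> clean >> load_core >> refresh_mart
print(run([refresh_mart, load_core, clean, extract]))
# ['extract', 'clean', 'load_core', 'refresh_mart']
```

Even though the tasks are passed in reverse order, the declared dependencies alone determine the execution order.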

Airflow DAGs: the entry page shows you the status of all your DAGs:

- what's the schedule of each job
- are they active, how often have they failed, etc.

Next, you can click on each of the DAGs and get into a detailed view:


It shows you the dependencies of your business's various tasks, ensuring that the order is handled correctly.


Superset is the entry point to your data. It's a popular open-source business intelligence dashboard tool that visualizes your data according to your needs. It supports a wide range of modern chart types. You can combine charts into dashboards that can be filtered and drilled down as expected from a BI tool. Access to dashboards is restricted to authenticated users only. A user can be given view or edit rights to individual dashboards using roles and permissions. Public access to dashboards is not supported.
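The access rule described above (authenticated users only, view or edit rights per dashboard granted through roles, no public access) can be expressed as a small check. This is a hypothetical model for illustration, not Superset's security manager; the role names and dashboard identifiers are assumptions.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Optional


class Permission(Enum):
    VIEW = "view"
    EDIT = "edit"


@dataclass
class User:
    name: str
    authenticated: bool
    roles: set[str] = field(default_factory=set)


# Hypothetical grants: role -> dashboard -> permissions that role grants.
ROLE_GRANTS: dict[str, dict[str, set[Permission]]] = {
    "covid_viewer": {"covid": {Permission.VIEW}},
    "sales_editor": {"sales": {Permission.VIEW, Permission.EDIT}},
}


def can_access(user: Optional[User], dashboard: str, permission: Permission) -> bool:
    """Grant access only to authenticated users holding a role with that permission."""
    if user is None or not user.authenticated:
        return False  # no public (anonymous) access to dashboards
    return any(
        permission in ROLE_GRANTS.get(role, {}).get(dashboard, set())
        for role in user.roles
    )
```

For example, a user holding only `covid_viewer` may view but not edit the `covid` dashboard and cannot see `sales` at all.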


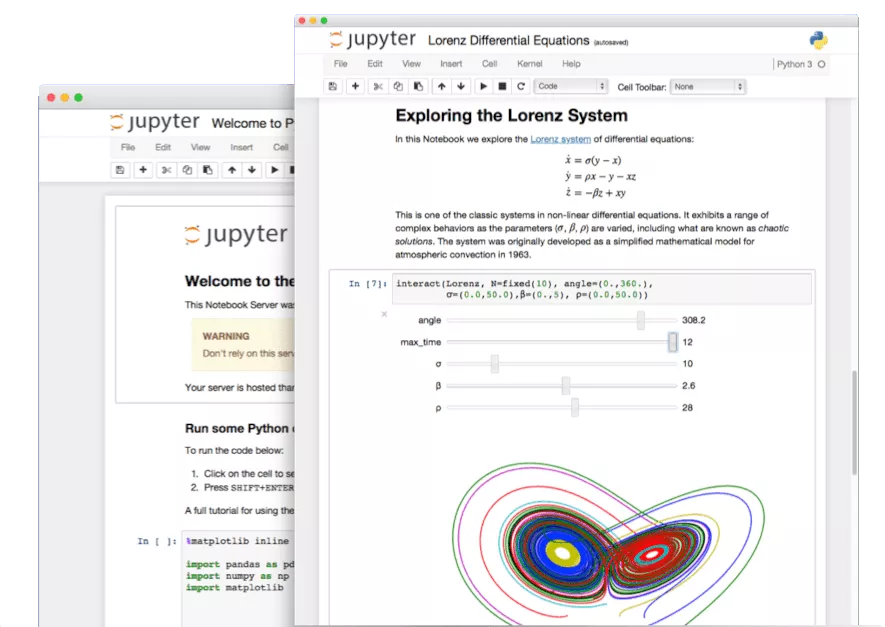

Jupyter Notebooks and Jupyter Hub provide an interactive IDE where you can code in Python or R (mainly) and implement your data science models, wrangle and clean your data, or visualize the data with charts. You are free to use whatever the Python or R libraries offer. It's a great tool to work with data interactively and share your results with others.

Jupyter Hub is a multi-user hub that spawns, manages, and proxies multiple instances of the single-user Jupyter notebook server. If you haven't heard of Jupyter Hub, you have most certainly seen or heard of Jupyter Notebooks, which turn your web browser into an interactive IDE.

Jupyter Hub encapsulates Jupyter Notebooks in a multi-user environment with lots of additional features. In this part, we mostly focus on the features of Jupyter Notebooks, as these are the ones you and other users will interact with.

To name a few Jupyter Notebooks features:

What Jupyter Hub adds on top:

Let's start with the storage layer. We use Postgres, one of the most widely used and loved databases today. Postgres is versatile and simple to use. It's a relational database that can be customized and scaled extensively.

Infrastructure is the part where we go into depth about how to run HelloDATA and its components on Kubernetes.

Kubernetes allows you to run and orchestrate container workloads. It has become the de facto standard for cloud-native apps, letting you (auto-)scale out and deploy the various open-source tools fast, on any cloud, and locally. This is called cloud-agnostic, as you are not locked into any cloud vendor (Amazon, Microsoft, Google, etc.).

Kubernetes follows the infrastructure-as-code approach, specifically in YAML, allowing you to version and test your deployment quickly. All the resources in Kubernetes, including Pods, Configurations, Deployments, Volumes, etc., can be expressed in YAML files and packaged with tools like Helm. Developers can quickly write applications that run across multiple operating environments. Costs can be reduced by scaling down, and any programming language can be used as long as it runs from a simple Dockerfile. Kubernetes stays manageable through its modularity and abstraction, and because everything runs in containers, you can monitor all your applications in one place.

Kubernetes Namespaces provide a mechanism for isolating groups of resources within a single cluster. Names of resources need to be unique within a namespace but not across namespaces. Namespace-based scoping is applicable only to namespaced objects (e.g., Deployments, Services, etc.) and not to cluster-wide objects (e.g., StorageClass, Nodes, PersistentVolumes, etc.).


Here, we have a look at the module view with an inside view of accessing the HelloDATA Portal.

The Portal API is served with Spring Boot, WildFly, and Angular.


This section follows up on how storage is persisted for the Domain View introduced in the chapters above.

Storage is an important topic, as this is where the business value and the data itself are stored.

From a Kubernetes and deployment view, everything is encapsulated inside a Namespace. As explained in the "Domain View" above, we have different layers, from one Business Domain to n (multiple) Data Domains.

Each domain holds its data on persistent storage, whether Postgres for relational databases, blob storage for files, or file storage on persistent volumes within Kubernetes.

GitSync is a tool we added to allow GitOps-style deployment. As a user, you can push changes to your git repo, and GitSync will automatically deploy them into your Kubernetes cluster.


Here is another view showing that persistent storage within Kubernetes (K8s) can hold data across Data Domains. If these persistent volumes are used to store Data Domain information, a backup and restore plan must also be implemented for this data.

Alternatively, blob storage from any cloud vendor or services such as a managed Postgres service can be used, as these are typically managed and come with features such as backup and restore.


HelloDATA uses Kubernetes Jobs to perform certain activities.

Contents:


HelloDATA can be operated as different platforms, e.g., development, test, and/or production platforms. The deployment is based on common CI/CD principles. It uses Git and Flux internally to deploy its resources onto the specific Kubernetes clusters.

In case of resource shortages, the underlying platform can be extended with additional resources upon request.

Horizontal scaling of the infrastructure can be done within the given resource boundaries (e.g., multiple pods for Superset).
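If horizontal scaling is automated with a Kubernetes HorizontalPodAutoscaler (an assumption; the text above only states that scaling happens within the given resource boundaries), the replica count follows the documented rule desiredReplicas = ceil(currentReplicas × currentMetric / targetMetric), clamped to the configured minimum and maximum. The sketch below reproduces that rule, including the default tolerance of 0.1 within which no scaling happens; the parameter defaults are illustrative.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,
    max_replicas: int = 10,
    tolerance: float = 0.1,
) -> int:
    """Replica count per the HPA rule ceil(current * currentMetric / targetMetric)."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        # Close enough to the target: keep the current replica count.
        desired = current_replicas
    else:
        desired = math.ceil(current_replicas * ratio)
    # Stay within the configured resource boundaries.
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    # Two Superset pods at 90 % CPU with a 60 % target: ratio 1.5, so scale to 3 pods.
    print(desired_replicas(2, 90, 60))  # 3
```

The clamp to `max_replicas` is what keeps automatic scaling within the resource boundaries; beyond that, the platform itself has to be extended.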

See the Roles and Authorization Concept.
