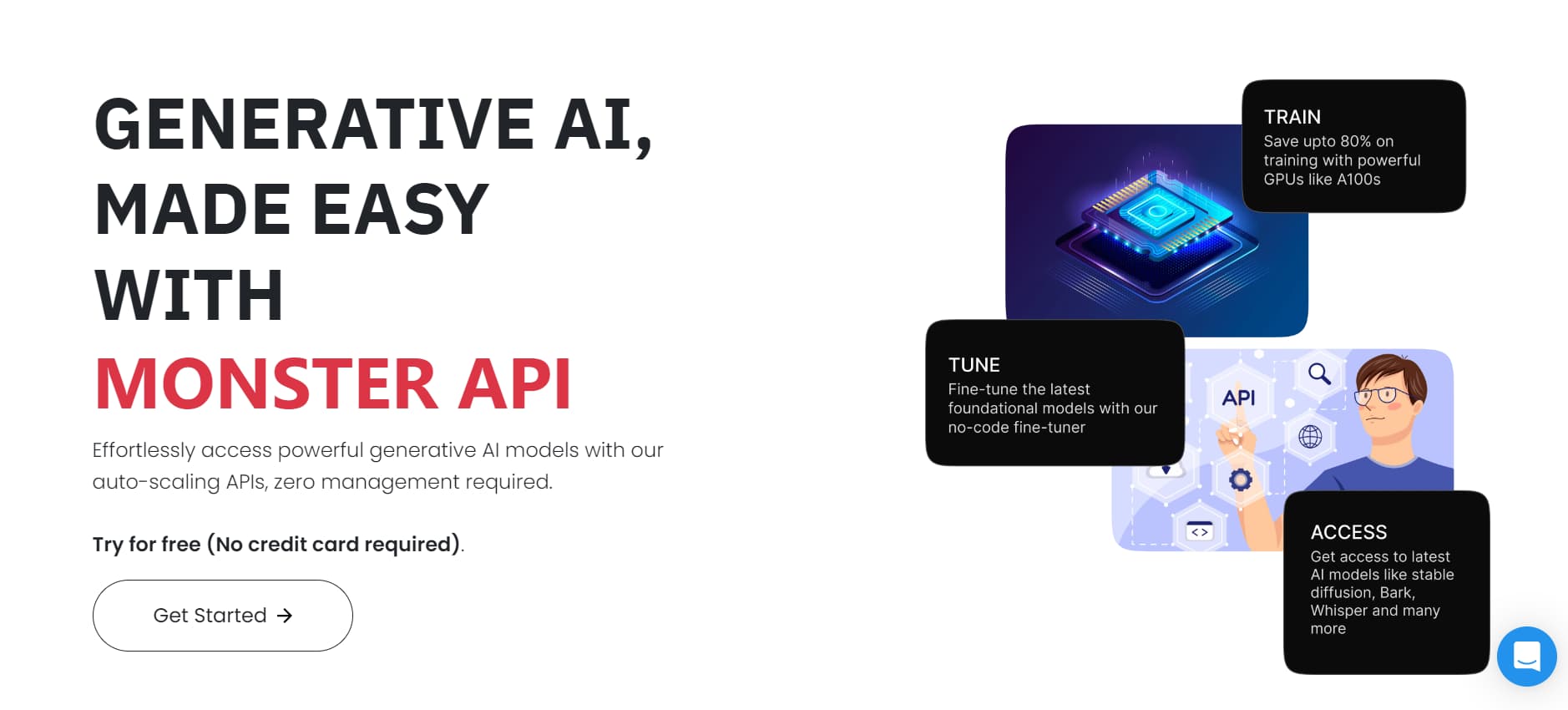

Monster API is a platform that streamlines the deployment and fine-tuning of large language models (LLMs). Its product, MonsterGPT, simplifies this process through a chat-based interface, removing the need for complex technical setup. With Monster API, developers can quickly deploy and customize LLMs for applications such as code generation, sentiment analysis, and classification, without managing infrastructure or hand-tuning intricate fine-tuning parameters. The platform aims to make LLM technology accessible to a wider range of users.

Interface name: `monsterapi`

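```javascript
const { LLMInterface } = require('llm-interface');

// Register the Monster API key, read from the MONSTERAPI_API_KEY environment variable.
LLMInterface.setApiKey({ monsterapi: process.env.MONSTERAPI_API_KEY });

async function main() {
  try {
    // Send a single prompt to the 'monsterapi' interface.
    const response = await LLMInterface.sendMessage(
      'monsterapi',
      'Explain the importance of low latency LLMs.',
    );

    console.log(response.results);
  } catch (error) {
    console.error(error);
    // Report failure via the exit code instead of an unhandled promise rejection.
    process.exitCode = 1;
  }
}

main();
```

The following model aliases are provided for this provider.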

- `default`: meta-llama/Meta-Llama-3-8B-Instruct
- `large`: google/gemma-2-9b-it
- `small`: microsoft/Phi-3-mini-4k-instruct
- `agent`: google/gemma-2-9b-it
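To make the alias table concrete, here is a minimal sketch of resolving an alias to the full model ID it stands for. `MONSTERAPI_MODEL_ALIASES` and `resolveModel` are hypothetical names used only for illustration; they are not part of llm-interface's API, and the library is not necessarily implemented this way.

```javascript
// Alias table copied from the list above (hypothetical constant name).
const MONSTERAPI_MODEL_ALIASES = Object.freeze({
  default: 'meta-llama/Meta-Llama-3-8B-Instruct',
  large: 'google/gemma-2-9b-it',
  small: 'microsoft/Phi-3-mini-4k-instruct',
  agent: 'google/gemma-2-9b-it',
});

// Hypothetical helper: map an alias to its full model ID.
// An omitted model falls back to the 'default' alias; any other name is
// assumed to already be a full model ID and is returned unchanged.
function resolveModel(model = 'default') {
  return Object.prototype.hasOwnProperty.call(MONSTERAPI_MODEL_ALIASES, model)
    ? MONSTERAPI_MODEL_ALIASES[model]
    : model;
}

console.log(resolveModel('small')); // microsoft/Phi-3-mini-4k-instruct
console.log(resolveModel());        // meta-llama/Meta-Llama-3-8B-Instruct
```

Note that `large` and `agent` currently point at the same model, so switching between them changes nothing for this provider.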

The following parameters can be passed through options.

- `add_generation_prompt`: If true, adds the generation prompt to the chat template.
- `add_special_tokens`: If true, adds special tokens to the prompt.
- `best_of`: Number of completions to generate and return the best.
- `early_stopping`: Whether to stop generation early.
- `echo`: If set to true, the input prompt is echoed back in the output.
- `frequency_penalty`: Penalizes new tokens based on their existing frequency in the text so far, reducing the likelihood of repeating the same line. Positive values reduce the frequency of tokens appearing in the generated text.
- `guided_choice`: Choices that the output must match exactly.
- `guided_decoding_backend`: Overrides the default guided decoding backend for this request.
- `guided_grammar`: If specified, output follows the context-free grammar.
- `guided_json`: If specified, output follows the JSON schema.
- `guided_regex`: If specified, output follows the regex pattern.
- `guided_whitespace_pattern`: Overrides the default whitespace pattern for guided JSON decoding.
- `ignore_eos`: Whether to ignore the end-of-sequence token.
- `include_stop_str_in_output`: Whether to include the stop string in the output.
- `length_penalty`: Penalty for length of the response.
- `logit_bias`: An optional parameter that modifies the likelihood of specified tokens appearing in the model-generated output.
- `logprobs`: Includes the log probabilities of the most likely tokens, providing insights into the model's token selection process.
- `max_tokens`: The maximum number of tokens that can be generated in the chat completion. The total length of input tokens and generated tokens is limited by the model's context length.
- `min_p`: Minimum probability threshold for token selection.
- `min_tokens`: Minimum number of tokens in the response.
- `n`: Specifies the number of responses to generate for each input message. Note that costs are based on the number of generated tokens across all choices. Keeping `n` as 1 minimizes costs.
- `presence_penalty`: Penalizes new tokens based on whether they appear in the text so far, encouraging the model to talk about new topics. Positive values increase the likelihood of new tokens appearing in the generated text.
- `repetition_penalty`: Penalizes new tokens based on whether they appear in the prompt and the generated text so far. Values greater than 1 encourage the model to use new tokens, while values less than 1 encourage the model to repeat tokens.
- `response_format`: Defines the format of the AI's response. Setting this to `{ "type": "json_object" }` enables JSON mode, ensuring the message generated by the model is valid JSON.
- `seed`: A random seed for reproducibility. If specified, the system will attempt to sample deterministically, so that repeated requests with the same seed and parameters return the same result. Determinism is not guaranteed.
- `skip_special_tokens`: Whether to skip special tokens in the response.
- `spaces_between_special_tokens`: Whether to include spaces between special tokens.
- `stop`: Up to 4 sequences where the API will stop generating further tokens.
- `stop_token_ids`: Token IDs at which to stop generating further tokens.
- `stream`: If set, partial message deltas will be sent, similar to ChatGPT. Tokens will be sent as data-only server-sent events as they become available, with the stream terminated by a `data: [DONE]` message.
- `stream_options`: Options for streaming responses.
- `temperature`: Controls the randomness of the AI's responses. A higher temperature results in more random outputs, while a lower temperature makes the output more focused and deterministic. Generally, it is recommended to alter this or `top_p`, but not both.
- `tool_choice`: Specifies which external tools the AI can use to assist in generating its response.
- `tools`: A list of external tools available for the AI to use in generating responses.
- `top_k`: The number of highest probability vocabulary tokens to keep for top-k sampling.
- `top_logprobs`: Details not available, please refer to the LLM provider documentation.
- `top_p`: Controls the cumulative probability of token selections for nucleus sampling. It limits the tokens to the smallest set whose cumulative probability exceeds the threshold. It is recommended to alter this or `temperature`, but not both.
- `use_beam_search`: Whether to use beam search for generating completions.
- `user`: Identifier for the user making the request.
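Several of the parameter descriptions above carry usage rules: `stop` takes at most 4 sequences, `temperature` and `top_p` should not both be altered, and `n` values above 1 add cost. The sketch below checks an options object against those three rules before it is sent. `checkMonsterOptions` is a hypothetical helper for illustration only, not part of llm-interface, and its warnings simply restate this page's guidance.

```javascript
// Hypothetical helper: returns a list of warnings for an options object,
// based only on the constraints stated in the parameter list above.
function checkMonsterOptions(options = {}) {
  const warnings = [];

  // `stop` takes up to 4 sequences; a lone string is normalized to an array here.
  const stops = options.stop === undefined ? [] : [].concat(options.stop);
  if (stops.length > 4) {
    warnings.push(`stop: ${stops.length} sequences given, at most 4 allowed`);
  }

  // Recommended: alter temperature or top_p, but not both.
  if (options.temperature !== undefined && options.top_p !== undefined) {
    warnings.push('temperature and top_p are both set; consider adjusting only one');
  }

  // Costs are based on generated tokens across all n choices.
  if (options.n !== undefined && options.n > 1) {
    warnings.push(`n is ${options.n}; each extra choice adds generated-token cost`);
  }

  return warnings;
}

// Example: an options object that sets both sampling controls.
const options = { max_tokens: 150, temperature: 0.7, top_p: 0.9 };
for (const warning of checkMonsterOptions(options)) {
  console.warn(warning);
}
```

The checks are advisory only; the API remains the authority on which values it accepts.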

Features supported by this interface:

- Streaming
- Tools
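The `stream` parameter describes the wire format: partial message deltas arrive as data-only server-sent events, and the stream ends with a `data: [DONE]` message. As a minimal sketch, assuming each event carries an OpenAI-style JSON chunk with the text at `choices[0].delta.content` (an assumption this page does not confirm), the hypothetical `collectStreamText` function below concatenates the deltas from a raw event-stream body. It illustrates the format only and does not show llm-interface's own streaming API.

```javascript
// Hypothetical helper: concatenate text deltas from a raw server-sent-event body.
// Assumes OpenAI-style chunks with the text at choices[0].delta.content.
function collectStreamText(rawBody) {
  let text = '';
  for (const line of rawBody.split('\n')) {
    const trimmed = line.trim();
    // Data-only events: skip blank separator lines and anything without "data:".
    if (!trimmed.startsWith('data:')) continue;

    const payload = trimmed.slice('data:'.length).trim();
    // The stream is terminated by a "data: [DONE]" message.
    if (payload === '[DONE]') break;

    const chunk = JSON.parse(payload);
    const delta = chunk.choices?.[0]?.delta?.content;
    if (typeof delta === 'string') text += delta;
  }
  return text;
}

// Example body: two deltas followed by the terminator.
const sample = [
  'data: {"choices":[{"delta":{"content":"Low latency "}}]}',
  '',
  'data: {"choices":[{"delta":{"content":"matters."}}]}',
  '',
  'data: [DONE]',
  '',
].join('\n');

console.log(collectStreamText(sample)); // Low latency matters.
```

A real client would parse events incrementally as bytes arrive rather than from a complete body; the one-shot version keeps the format easy to see.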

Free Tier Available: Monster API is a commercial product but offers a free tier. No credit card is required to get started.

To get an API key, first create a Monster API account, then visit the link below.

Monster API documentation is available here.