Jiaqi Chen*, Xiaoye Zhu*, Tianyang Liu*, Ying Chen, Xinhui Chen,

Yiwen Yuan, Chak Tou Leong, Zuchao Li†, Tang Long, Lei Zhang,

Chenyu Yan, Guanghao Mei, Jie Zhang†, Lefei Zhang†

*Equal contribution.

†Equal contribution of corresponding author.

| Website |

Paper |

Data |

Model |

Demo |

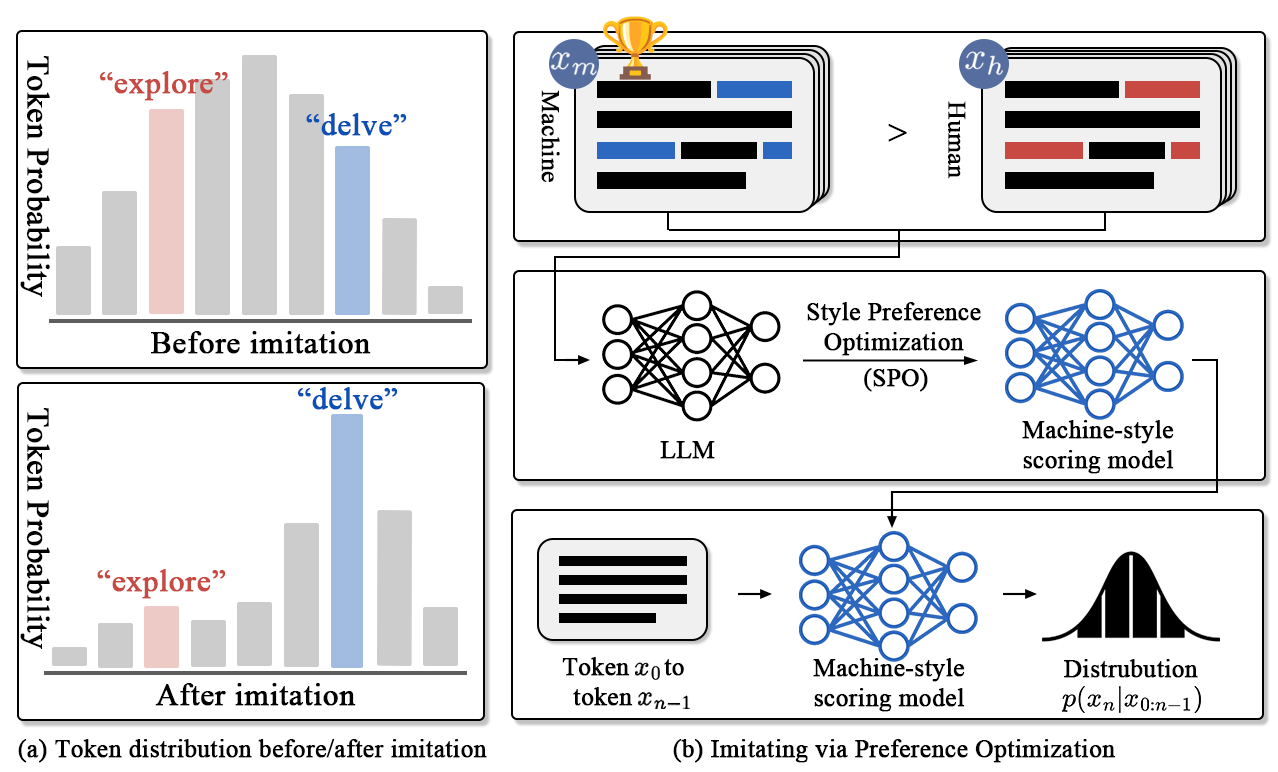

Detecting machine-revised text remains a challenging task as it often involves subtle style changes embedded within human-originated content. The ImBD framework introduces a novel approach to tackle this problem, leveraging style preference optimization (SPO) and Style-CPC to effectively capture machine-style phrasing. Our method achieves state-of-the-art performance in detecting revisions by open-source and proprietary LLMs like GPT-3.5 and GPT-4o, demonstrating significant efficiency with minimal training data.

We are excited to share our code and data to support further exploration in detecting machine-revised text. We welcome your feedback and invite collaborations to advance this field together!

- [2024, Dec 16] Our online demo is available on hugging-face now!

- [2024, Dec 13] Our model and local inference code are available!

- [2024, Dec 9] 🎉🎉 Our paper has been accepted by AAAI 25!

- [2024, Dec 7] We've released our website!

Environment setup

conda create -n ImBD python=3.10

conda activate ImBD

pip install -r requirements.txtDownload necessary models to ./models

bash scripts/download_model.sh[GPU memories needed for inference: ~11G]

We provide a script to download our inference checkpoint from huggingface. (Make sure you have download the above model since our inference checkpoint only contains lora weights)

bash scripts/download_inference_checkpoint.shYou can also finetune and save the model from scratch according to our Reproduce Results part.

Next, run the following script to launch the demo:

bash scripts/run_inference.shThere are two args in this script:

--task could be one of ["polish", "generate", "rewrite", "expand", "all"] . all denotes all-in-one combining the above four tasks, whose accuracy may not be as high as for a single task

--detail could be True or False, indicating whether to show the results of the four tasks.

[GPU memories needed for training and evaluation: ~40G]

Tuning the gpt-neo-2.7b model with SPO (recommend)

bash scripts/train_spo.shOr download our full checkpoint without tuning again.

bash scripts/download_full_checkpoint.shEval tuned model on our multi-domain datasets

# For polish task

bash scripts/eval_spo_polish.sh

# For rewrite task

bash scripts/eval_spo_rewrite.sh

# For expand task

bash scripts/eval_spo_expand.sh

# For generation task

bash scripts/eval_spo_generation.shThe following script will train the model of corresponding language and automatically evaluate the model's result, including Spanish, Portuguese and Chinese.

bash scripts/train_spo_multilang.shFirst Download other models

bash scripts/download_other_models.sh

Then Eval other models on our datasets

# For polish task

bash scripts/eval_other_models_polish.sh

# For rewrite task

bash scripts/eval_other_models_rewrite.sh

# For expand task

bash scripts/eval_other_models_expand.sh

# For generation task

bash scripts/eval_other_models_generation.shEval Roberta models on our datasets

# For four tasks

bash eval_supervised.shTrain and eval sft/rlhf/orpo models on our datasets

# SFT

python ablation_exp/train_gpt_neo_sft.py

# RLHF

python ablation_exp/train_gpt_neo_rlhf.py

# ORPO

python ablation_exp/train_gpt_neo_orpo.py

# Eval

bash scripts/eval_ablation.shDownload text-generation models

Notes: You need to first apply for corresponding model download permission and fill the HF_TOKEN= in the download script, then remove the comments if you need to regenerate the datasets

bash scripts/download_other_models.sh

Build Data using opensource models

bash scripts/build_data.sh

We provide related codes in tools/data_builder_gpts. Make sure you fill the api_key and set the right path to save results.

- Inference code for detection.

- Optimize the preservation of the trained model.

- LoRA checkpoint for inference (without loading two full model checkpoint)

- Optimize GPU memory usage for evaluation scripts.