
Home

Awantik Das edited this page Oct 27, 2017


PySpark Course Details

- Apache Spark is a fast distributed computing framework that abstracts its internal details away from the developer.

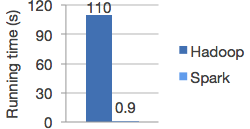

- Comparison with Hadoop MapReduce.

- Many people who work in data processing already use Python, but the computing power of a single machine limits the scale they can work at. PySpark removes that limit and cuts down the "coffee time" spent waiting for jobs to finish.

- Unlike Spark 1.6, the 2.x releases give PySpark the same computational power as Scala.

- Python is an easier language to learn and has huge community support.

- The APIs are similar to Pandas and scikit-learn, so the barrier to entry is low.

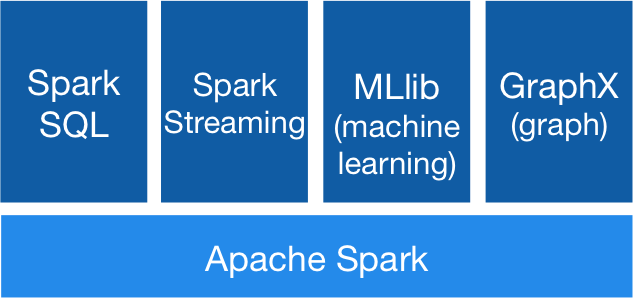

- Components of Spark

- Runs everywhere