Facial Landmarks Detection (general background knowledge)

Perspective Transformation Wiki

An example for getPerspectiveTransform (OpenCV docs).

- Find a vector image to use as the mask filter, like the one below:

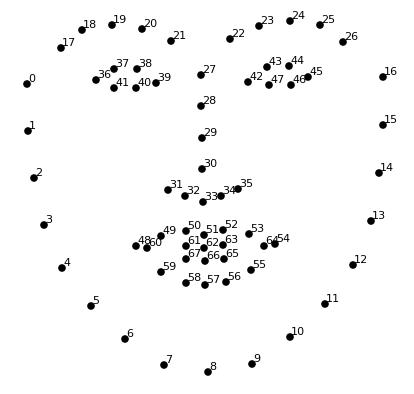

- Detect face landmarks from an image

Recommended tools: the face landmark detection library dlib, or the open-source face analysis toolkit OpenFace.

- Anchor point

The anchor point is the face landmark that aligns with the center of your mask filter.

Example:

```python
anchor_pt = [landmarks[27, 0], landmarks[27, 1]]
```

- The most important part is deciding on the four pairs of "perspective points" used to attach the mask filter.

Note: no three of the four points may lie on the same line.
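The collinearity condition can be checked before computing the transformation. The following is a minimal pure-Python sketch; the helper names `cross` and `has_collinear_triple`, the tolerance `eps`, and the `bad_pts` example are illustrative and not part of the original code.

```python
from itertools import combinations

def cross(o, a, b):
    """Twice the signed area of triangle o-a-b; zero means the three points are collinear."""
    return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])

def has_collinear_triple(points, eps=1e-9):
    """Return True if any three of the given points lie on one line."""
    return any(abs(cross(p, q, r)) <= eps for p, q, r in combinations(points, 3))

# Points used in this guide for a 600 x 200 mask filter
orgn_per_pts = [[0, 0], [599, 0], [299, 99], [299, 199]]
# Hypothetical bad choice: the first three points all have y = 0
bad_pts = [[0, 0], [299, 0], [599, 0], [299, 199]]

print(has_collinear_triple(orgn_per_pts))
print(has_collinear_triple(bad_pts))
```

A point set that fails this check gives a degenerate transformation, so it is worth testing both the original and the destination points.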

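Because the size of the mask filter is 600 x 200, the original perspective points are:

```python
orgn_per_pts = [[0, 0], [599, 0], [299, 99], [299, 199]]
```

They can also be the four corners of the mask filter. In either case, each original point must be listed in the same order as the destination point it maps to.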

Project the mask filter to the corresponding four points.

```python
import math

# Decide the face rotation (roll) angle from the two jaw extremes
face_left_extreme = [landmarks[0, 0], landmarks[0, 1]]
face_right_extreme = [landmarks[16, 0], landmarks[16, 1]]
x_dif = face_right_extreme[0] - face_left_extreme[0]
y_dif = face_right_extreme[1] - face_left_extreme[1]
face_roll_angle = math.atan2(y_dif, x_dif)

# Roll used below; pose[2] (the roll reported by OpenFace) can be used instead
roll = face_roll_angle

# Destination points: 10 px above and 30 px below the outer eyebrow landmarks
per_tl = [landmarks[17][0] - 10 + 10 * math.sin(roll), landmarks[17][1] - 10 * math.cos(roll)]
per_tr = [landmarks[26][0] + 10 + 10 * math.sin(roll), landmarks[26][1] - 10 * math.cos(roll)]
per_3 = [landmarks[17][0] - 10 - 30 * math.sin(roll), landmarks[17][1] + 30 * math.cos(roll)]
per_4 = [landmarks[26][0] + 10 - 30 * math.sin(roll), landmarks[26][1] + 30 * math.cos(roll)]
dest_per_pts = [per_tl, per_tr, per_3, per_4]
```

The major goal of this step is to give an accurate estimate of the destination perspective points for projecting the mask filter onto the face.
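The same computation can be wrapped in two small functions so it is easy to try with different offsets. This is a sketch only: the function names and the `pad`, `up` and `down` parameters are hypothetical, the landmark values are made up, and the landmarks are assumed to follow the 68-point layout in which 0 and 16 are the jaw extremes and 17 and 26 are the outer eyebrow ends.

```python
import math

def roll_from_landmarks(landmarks):
    """Roll angle in radians of the line from landmark 0 to landmark 16 (image coordinates)."""
    (x0, y0), (x1, y1) = landmarks[0], landmarks[16]
    return math.atan2(y1 - y0, x1 - x0)

def dest_perspective_points(landmarks, roll, pad=10, up=10, down=30):
    """Four destination points around landmarks 17 and 26.

    Returned order: top-left, top-right, bottom-left, bottom-right.
    """
    s, c = math.sin(roll), math.cos(roll)
    lx, ly = landmarks[17]
    rx, ry = landmarks[26]
    return [
        [lx - pad + up * s, ly - up * c],
        [rx + pad + up * s, ry - up * c],
        [lx - pad - down * s, ly + down * c],
        [rx + pad - down * s, ry + down * c],
    ]

# Made-up landmarks for an upright face; only indices 0, 16, 17 and 26 matter here
landmarks = [(0, 0)] * 68
landmarks[0], landmarks[16] = (100, 200), (300, 200)
landmarks[17], landmarks[26] = (120, 150), (280, 150)

roll = roll_from_landmarks(landmarks)
dest_per_pts = dest_perspective_points(landmarks, roll)
print(roll, dest_per_pts)
```

Because these destination points are ordered top-left, top-right, bottom-left, bottom-right, they pair naturally with the four corners of the mask filter listed in the same order.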

- Find the perspective transformation

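```python
import cv2
import numpy as np

# getPerspectiveTransform expects two float32 arrays of four points each
M = cv2.getPerspectiveTransform(np.float32(orgn_per_pts), np.float32(dest_per_pts))
```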
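To see what the 3 x 3 matrix encodes, the transformation can also be solved by hand from the four point pairs. The sketch below uses pure Python and is not OpenCV's implementation; `solve_linear`, `perspective_transform` and `apply_transform` are hypothetical helper names, and the point values are made up. It uses the four corners of a 600 x 200 mask as the original points, paired in order with a top-left, top-right, bottom-left, bottom-right destination.

```python
def solve_linear(a, b):
    """Solve a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [list(map(float, row)) + [float(rhs)] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("degenerate points: three of them may be collinear")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for k in range(col, n + 1):
                m[r][k] -= f * m[col][k]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        s = sum(m[i][k] * x[k] for k in range(i + 1, n))
        x[i] = (m[i][n] - s) / m[i][i]
    return x

def perspective_transform(src, dst):
    """3x3 matrix that maps each src point (x, y) to its dst point (u, v)."""
    a, b = [], []
    for (x, y), (u, v) in zip(src, dst):
        # u = (h0*x + h1*y + h2) / (h6*x + h7*y + 1), and likewise for v
        a.append([x, y, 1, 0, 0, 0, -u * x, -u * y])
        b.append(u)
        a.append([0, 0, 0, x, y, 1, -v * x, -v * y])
        b.append(v)
    h = solve_linear(a, b)
    return [h[0:3], h[3:6], [h[6], h[7], 1.0]]

def apply_transform(m, pt):
    """Map one point through the matrix, dividing by the homogeneous coordinate."""
    x, y = pt
    w = m[2][0] * x + m[2][1] * y + m[2][2]
    u = (m[0][0] * x + m[0][1] * y + m[0][2]) / w
    v = (m[1][0] * x + m[1][1] * y + m[1][2]) / w
    return [u, v]

corners = [[0, 0], [599, 0], [0, 199], [599, 199]]        # mask corners
dest = [[110, 140], [290, 150], [105, 182], [295, 190]]   # made-up face points
H = perspective_transform(corners, dest)
mapped = [apply_transform(H, p) for p in corners]
print(mapped)
```

Each mask corner lands on its paired destination point, which is the property the projection step relies on.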

- Project the mask filter

```python
# The output size is given as (width, height) of the face image
p_mask = cv2.warpPerspective(mask_filter, M, (face_img.shape[1], face_img.shape[0]))
```

- Overlay the mask on the face image

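```python
import cv2
import numpy as np

def overlay(face_img, overlay_img):
    # overlay_img must have four channels (color + alpha)
    # Color channels of the mask
    overlay_rgb = overlay_img[:, :, :3]
    # Opacity (alpha) channel of the mask
    overlay_mask = overlay_img[:, :, 3:]
    # Background weight is the inverse of the mask opacity
    bkgd_mask = 255 - overlay_mask
    overlay_mask = cv2.cvtColor(overlay_mask, cv2.COLOR_GRAY2BGR)
    bkgd_mask = cv2.cvtColor(bkgd_mask, cv2.COLOR_GRAY2BGR)
    # Scale to [0, 1] and weight each image by its mask
    other_part = (face_img * (1 / 255.0)) * (bkgd_mask * (1 / 255.0))
    overlay_part = (overlay_rgb * (1 / 255.0)) * (overlay_mask * (1 / 255.0))
    # Sum both parts and scale back to [0, 255]
    final_img = cv2.addWeighted(other_part, 255.0, overlay_part, 255.0, 0.0)
    # Convert the floating-point result back to an 8-bit image
    return np.clip(np.round(final_img), 0, 255).astype(np.uint8)
```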
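Per pixel, the overlay function reduces to standard alpha blending: each output channel is the mask color weighted by its opacity plus the face color weighted by the remaining transparency. A minimal sketch for a single pixel, where `blend_pixel` is a hypothetical helper and the pixel values are made up:

```python
def blend_pixel(bg, fg_with_alpha):
    """Blend one 4-channel mask pixel over one 3-channel face pixel (values 0 to 255)."""
    *fg, alpha = fg_with_alpha
    a = alpha / 255.0  # opacity in [0, 1]
    return [round(f * a + b * (1.0 - a)) for b, f in zip(bg, fg)]

face_px = [10, 20, 30]
transparent = blend_pixel(face_px, [200, 100, 50, 0])    # mask fully transparent
opaque = blend_pixel(face_px, [200, 100, 50, 255])       # mask fully opaque
print(transparent, opaque)
```

A fully transparent mask pixel leaves the face pixel unchanged, and a fully opaque one replaces it; values in between mix the two.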

We recommended OpenFace earlier because it also estimates head pose, which includes the head's rotational movements: roll, pitch and yaw. The estimates are provided in the speech.csv file for each frame. Since the relative pose between the face and the mask is assumed to be fixed, the roll angle from OpenFace can replace the face_roll_angle calculated above. The pitch angle can also help determine the four "perspective points" in the image and so improve the mask filtering effect.

The three axes are shown in the image above; counter-clockwise is the positive rotation direction for each axis. Rz is the axis that points backwards, and it is the axis the head rolls around. Roll angles are given in the pose_Rz column of speech.csv, pitch values in the pose_Rx column, and yaw values in the pose_Ry column.
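Reading the pose columns per frame could look like the sketch below. It uses only the standard library and an in-memory sample instead of a real speech.csv; the sample values are made up, the `frame` column name and the space after each comma in the header are assumptions about the file layout, and `read_head_pose` is a hypothetical helper. The angles are assumed to be in radians, which is what `math.sin` and `math.cos` expect, so check the documentation of your OpenFace version.

```python
import csv
import io

# Made-up rows standing in for an OpenFace output file; real files have many more columns
sample_csv = io.StringIO(
    "frame, pose_Rx, pose_Ry, pose_Rz\n"
    "1, 0.05, -0.10, 0.02\n"
    "2, 0.06, -0.12, 0.03\n"
)

def read_head_pose(fileobj):
    """Return {frame: (pitch, yaw, roll)} read from pose_Rx, pose_Ry and pose_Rz."""
    # skipinitialspace drops the blank that follows each comma
    reader = csv.DictReader(fileobj, skipinitialspace=True)
    poses = {}
    for row in reader:
        poses[int(row["frame"])] = (
            float(row["pose_Rx"]),
            float(row["pose_Ry"]),
            float(row["pose_Rz"]),
        )
    return poses

poses = read_head_pose(sample_csv)
pitch, yaw, roll = poses[1]
print(pitch, yaw, roll)
```

With a real file, `open("speech.csv", newline="")` would take the place of `sample_csv`, and the roll value for each frame could then be used in place of face_roll_angle.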